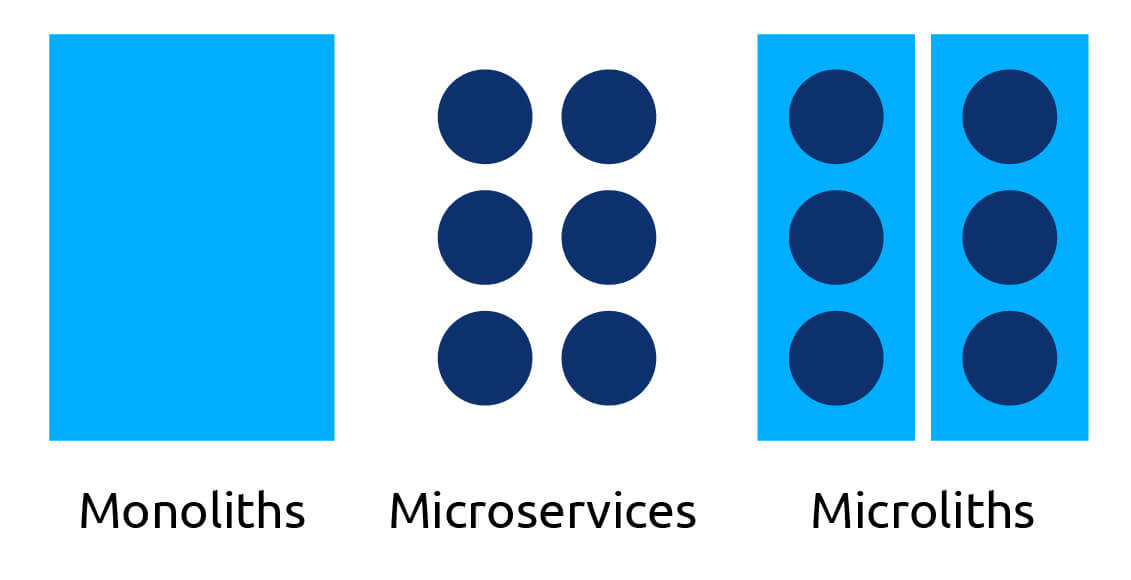

Software architecture has evolved through alternating phases of complexification and simplification. From single-server rooms, to racks of clusters, to cloud infrastructure, to cloud platforms, to cloud services; from monolithic software systems, to microservices, to serverless, and function-as-a-service, each model emerged to address the scaling and coordination challenges of the one before it.

Yet each also introduced its own challenges: increased latency, orchestration complexity, and operational overhead. What has become clear is that scaling distributed systems efficiently is not a matter of adding more layers or breaking systems down into smaller components, but of reducing complexity.

The Distributed Microlith represents that realization. It is an architectural component that is unified, remains coherent, and scales globally, creating a high performance, distributed platform. It preserves the simplicity of a single runtime while allowing independent teams to develop and deploy modular components within it.

Unified Systems, Independent Teams

A distributed microlith looks monolithic from the outside but behaves modularly within. Teams can still work autonomously—each building, testing, and merging their own components—yet everything executes in the same runtime. Instead of separate services communicating over APIs, functions interact directly in the same process. This eliminates serialization and communication overhead, making each operation as fast as a local call.

The effect is measurable. Internal benchmarks have demonstrated that when network communication is removed, a unified runtime can achieve performance at the sub-millisecond level. Latency is reduced not by optimization tricks, but by removing the network itself from the equation. In practice, distributed microliths significantly reduce response times for dynamic workloads while maintaining a coherent codebase that teams can collectively reason about.

This efficiency compounds as systems grow. Every avoided network call prevents cost—less infrastructure, fewer failure points, and dramatically simpler observability. Teams spend less time maintaining pipelines and more time refining products.

Efficiency Through Structure, Not Scale

Traditional distributed architectures scale by multiplying the number of systems. The distributed microlith scales by replicating structure. Each node in the network runs the same unified process, managing local requests while synchronizing state with others through a shared fabric. This active-active pattern yields high throughput and redundancy without central coordination.

The implications are significant. When deployed across clouds or regions, each node operates autonomously yet remains part of a cohesive system, allowing traffic to be routed to the closest deployment. Outages in one region are automatically isolated, so service continuity is maintained across the rest of the network. Because data and logic are integrated, edge nodes can serve users locally without relying on distant APIs.

The result is a system that outperforms specialized high-compute networks while maintaining a fraction of their infrastructure footprint. It is at once simpler, faster, and more resilient.

A Model Proven in Practice

These principles are not theoretical. Platforms like Harper have demonstrated them in production across multiple domains. In e-commerce environments, Harper nodes have been used to pre-render cache and dynamically inject real-time data at request time for product pages, reducing median page load times by over 70% without introducing a CDN layer. In real-time applications such as live sports tracking and flight monitoring, Harper’s unified runtime processes messages, data storage, and distribution within the same memory space, eliminating inter-service delays.

At a global scale, Harper Fabric extends these runtimes into a coordinated mesh. Each node can read and write independently while maintaining synchronization across regions and clouds. The same application logic runs everywhere, automatically distributing workloads and balancing latency for users. What emerges is a multi-cloud, active-active topology that offers redundancy beyond what any single-provider system can achieve, without the complexity of orchestration frameworks, queue-based replication, or costs growing without visibility.

The architecture demonstrates that distributed systems do not need to be disassembled to scale. Simplicity, properly constructed, scales further and faster than fragmentation ever could.

The Strength of Simplicity

The distributed microlith does not abandon modularity or autonomy; it refines them. It demonstrates that independence and cohesion can coexist when structure supplants infrastructure as the organizing principle.

What began as an effort to unify runtime layers has become a broader realization: simplicity is not a constraint on scale—it is the method by which scale remains sustainable.

Harper and Harper Fabric are the living proof of that idea. They embody a system where data, logic, and distribution operate as one, expanding naturally from a single process to a global network. For teams shaping the future of distributed applications, the takeaway is clear: the most enduring systems begin simply and stay unified as they grow.

.jpg)